Understanding machine consciousness through the Chinese Room Thought Experiment

Published by Blog Editor on

Introduction

Chinese Room Thought experiment concept picture

People have long wondered whether machines can be conscious. The Chinese Room thought experiment, proposed by philosopher John Searle in 1980, challenges the notion that machines can truly comprehend language. It has become a cornerstone of the ongoing debate surrounding artificial intelligence (AI) and machine consciousness. For data scientists, this thought experiment carries significant implications for the development and use of AI systems.

John Searle by Matthew Breindel

We need to remember that in 1980 China was still a largely closed country, and today’s close contact between the Western world and the People’s Republic of China (PRC) was not yet in place. It was only after Deng Xiaoping, who led the PRC from 1978 to 1989, took power that China’s economy opened up to reform. John Searle would likely have perceived Chinese as a language inaccessible to most people in the Western world.

Deng Xiaoping

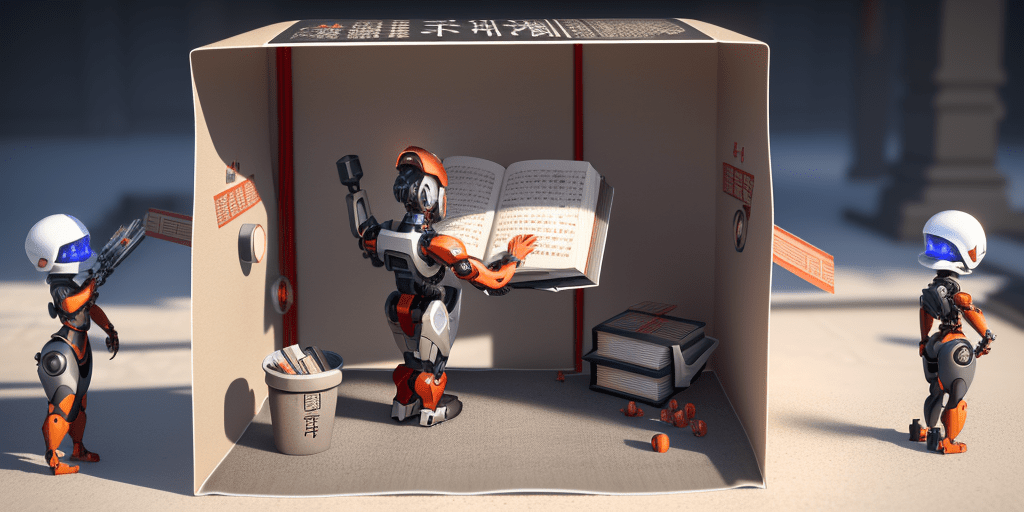

In the Chinese Room thought experiment, a person in a room receives Chinese characters without any prior knowledge of the language. However, they have access to a set of rules that enable them to respond appropriately to these symbols in Chinese. Despite not comprehending the language itself, someone outside the room could easily be convinced that the person inside understands Chinese based on the responses.
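The setup can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `RULE_BOOK`, `FALLBACK` and `room_operator` are hypothetical, and the rule entries are invented for the example rather than taken from Searle. The point is that the lookup compares symbols for equality and never touches their meaning.

```python
# Hypothetical rule book: maps incoming symbol strings to outgoing ones.
# The entries are illustrative assumptions, not taken from Searle.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会，我会说中文。",
}

# Reply used when no rule matches the incoming symbols.
FALLBACK = "请再说一遍。"


def room_operator(symbols: str) -> str:
    """Return the reply the rule book prescribes for the incoming symbols.

    The function only checks whether the input string equals a key in the
    rule book. It never consults what the symbols mean, which mirrors the
    person in the room.
    """
    return RULE_BOOK.get(symbols, FALLBACK)


if __name__ == "__main__":
    print(room_operator("你好吗？"))
```

To an observer outside the room, the replies look fluent, yet nothing in the function depends on knowing Chinese.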

The experiment highlights the difference between weak and strong AI: the former is limited to performing specific tasks, while the latter would be capable of human-level intelligence and, with it, machine consciousness.

Implications for Data Science

For data science, the Chinese Room thought experiment prompts reflection on the limitations of AI systems, on how far they truly understand human language and behaviour, and on the broader context in which they operate.

One crucial challenge faced by AI systems is the problem of context, where they can recognize patterns and respond in a limited domain but struggle to understand the broader context of those patterns. This can result in misunderstandings and errors when dealing with complex human behaviour and anatomy.

Anatomically wrong AI-generated picture from the prompt “hand”, showing a lack of machine consciousness

Another challenge is the problem of bias in AI systems. AI is only as objective as the data it is trained on: if the data contains biases or inaccuracies, the AI system will reflect them. In areas such as finance, healthcare, and criminal justice, this can have serious consequences. Many AI models are trained on data from the internet. Because people who post on the internet can be racist, ageist, body-shaming and worse, these aggressive biases are reflected in the models unless corrected by benevolent humans.
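A toy example makes the mechanism concrete. The sketch below is an assumption-laden illustration, not a real training pipeline: `TRAINING_DATA`, the group labels and the `train` function are all hypothetical, and the "model" is nothing more than a majority vote per group. It still shows how a skew in past decisions carries straight through to future ones.

```python
from collections import defaultdict

# Hypothetical historical decisions as (group, approved) pairs. The skew
# against group "B" is deliberate and purely illustrative.
TRAINING_DATA = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", False), ("B", False), ("B", False), ("B", True),
]


def train(rows):
    """Learn the majority decision for each group from past decisions."""
    tally = defaultdict(lambda: [0, 0])  # group -> [rejections, approvals]
    for group, approved in rows:
        tally[group][int(approved)] += 1
    # Predict approval only where approvals outnumber rejections.
    return {group: counts[1] > counts[0] for group, counts in tally.items()}


model = train(TRAINING_DATA)
print(model)  # {'A': True, 'B': False}
```

The learned rule rejects every member of group "B" simply because most past decisions did, without any notion of whether those decisions were fair.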

Conclusion

The Chinese Room thought experiment provides a framework for understanding the limitations of AI systems and their true capacity for understanding human language and behaviour. Data scientists, and everyone who is subjected, often involuntarily, to their work, must be mindful of these limitations when developing and implementing AI systems to ensure their responsible and effective use.

AI’s capabilities have significant ramifications for humanity, which must take care not to overestimate the potential of these systems. While AI is proficient at performing specific tasks, true comprehension or consciousness is beyond its reach. A machine that has never experienced life will not understand what life is.

One pertinent area of concern is natural language processing (NLP), the subfield of AI that deals with computer-human interaction in language. NLP systems are becoming increasingly sophisticated and versatile, enabling a variety of applications such as language translation, sentiment analysis, and chatbot interactions. It is crucial to recognize that even the most advanced NLP systems rely on a set of black box neural networks to operate. These networks facilitate pattern recognition in human language and appropriate responses, but they do not constitute genuine comprehension of meaning. For instance, an NLP system can correctly identify that the phrase “I’m feeling blue today” indicates sadness, but it does not truly understand the subjective experience of being sad.
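The gap between matching a pattern and grasping a meaning can be shown with a deliberately simplified stand-in. Real NLP systems use neural networks rather than a keyword table, so the following is only a sketch: `EMOTION_CUES` and `detect_emotion` are hypothetical names, and the cue words are assumptions chosen for the example.

```python
import re
from collections import Counter

# Hypothetical cue-word lexicon; the entries are illustrative assumptions.
EMOTION_CUES = {
    "blue": "sadness",
    "sad": "sadness",
    "happy": "joy",
    "glad": "joy",
}


def detect_emotion(text: str) -> str:
    """Label text with the emotion whose cue words occur most often."""
    # Split the lowercased text into runs of letters.
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter(EMOTION_CUES[t] for t in tokens if t in EMOTION_CUES)
    if not counts:
        return "unknown"
    return counts.most_common(1)[0][0]


print(detect_emotion("I'm feeling blue today"))  # sadness
print(detect_emotion("The sky is blue today"))   # sadness (a false match)
```

The second call gives the same label for a sentence about the weather, because the function reacts to the word "blue" and has no access to what the speaker feels.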

This has significant implications for applications such as mental health chatbots, which rely on NLP to offer support to individuals with mental health concerns. A neural network has never experienced the loss of a loved one, abuse, bullying, fear or unemployment, and can only soullessly echo the words of the people whose data it was fed. We must be cautious in the coming years when the data scientists and companies that profit from these systems try to sell them to us as harmless.

Anxiety UK’s Mental Health Chatbot. Chatbots with today’s AI don’t show machine consciousness.

One example is the use of psychological marketing techniques in which cute characters are used to downplay the dangers and threats that AI can pose to the very fabric of society. We must always remember that data science is not science; it is the use of scientific tools to operate for profit. And if big money is involved, the people usually suffer. NLP can be used for the good of society and humanity, but at the moment it is largely controlled by a small set of very powerful and rich individuals and companies that can afford the computing resources to train these models.

Cute characters to distract from potentially unethical uses of AI?

The people in these companies will not do anything that harms their salaries and shareholder payouts. It is up to us to make sure that we can continue to lead prosperous lives and that AI, and NLP specifically, is used for the good of humanity and not for the profit of the few.

To summarise, the Chinese Room thought experiment serves as a potent reminder that while AI systems are highly effective at specific tasks, they lack genuine understanding or consciousness. Humanity must keep these limitations in mind and maintain a critical approach to AI systems. By comprehending the possibilities and limitations of these systems, we can use them responsibly and effectively.

Please also watch the videos on Explainable AI, Artificial General Intelligence, a comparison of the Industrial Revolution with the AI Revolution, and AI in Health Care.